Rishab K Pattnaik

AI Researcher and Electronics & Communication Engineering student at BITS, specializing in multimodal AI systems across vision, audio, and text. I have contributed to published research in medical imaging, developing wavelet-CNN architectures for bone fracture detection with 92.22% accuracy, and have built production systems such as Moody.AI, U-Tube AI, and EdgeSeg-AI. Driven by curiosity about AI architectures, I actively explore emerging models like Vision Transformers, Swin Transformers, Diffusion Models, Mamba, and LLM-Seg by implementing and experimenting with research papers. Proficient in PyTorch and TensorFlow, I deploy solutions using Docker and Streamlit, with expertise in model optimization and fine-tuning techniques. Through technical blogs, I bridge AI theory with practical implementation.

Currently engaged in Emergency Research within the Department of Surgery at Hamad Medical Corporation, under the guidance of Dr. Sarada Prasad Dakua, Principal Data Scientist. The work encompasses developing and refining AI-driven approaches to enhance patient triage and clinical decision-making in high-pressure emergency settings. This involvement aims to improve both clinical outcomes and operational efficiency by leveraging advanced data science techniques tailored to emergency care challenges.

As a Research Assistant in the Department of ECE at BITS Pilani, I contributed to pioneering research in medical imaging under the supervision of Dr. Rajesh Kumar Tripathy. The work involved developing and applying novel deep learning models, including advanced CNNs and transformer architectures, alongside the integration of wavelet-DNN techniques to enhance diagnostic accuracy from medical scans.

As a Research Intern at the Indira Gandhi Centre for Atomic Research, Kalpakkam, under the supervision of Raja Sekhar M (SO/E), my work focused on advancing camouflaged object detection by fine-tuning Meta's Segment Anything Model (SAM) for improved identification of objects in complex visual environments. This role involved designing and implementing specialized training strategies to adapt state-of-the-art vision transformer models for challenging detection tasks.

A peer-reviewed research paper published in Healthcare Technology Letters (2025), written under the supervision of Dr. Rajesh Kumar Tripathy (BITS Pilani) and Dr. Haipeng Liu (Coventry University). This work presents a novel multiband-frequency aware deep representation learning network (MFADRLN) that integrates discrete wavelet transform-based multiresolution analysis with the EfficientNetV2B2 architecture for automated bone fracture detection, achieving 92.22% accuracy.
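To illustrate what a wavelet-based multiresolution step produces before a CNN sees the image, here is a minimal sketch of a single-level 2D Haar decomposition in plain Python. The Haar wavelet, the function name `haar_dwt2`, and the sub-band names are assumptions chosen for illustration; the paper's actual wavelet family, number of levels, and preprocessing are not stated here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def haar_dwt2(image: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Single-level 2D Haar transform (illustrative helper, not the paper's code).

    Returns four half-resolution sub-bands:
    approx   - local average (low-frequency content)
    row_diff - top row minus bottom row of each 2x2 block
    col_diff - left column minus right column of each 2x2 block
    diag     - diagonal difference of each 2x2 block
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image height and width must be even")

    approx, row_diff, col_diff, diag = [], [], [], []
    for r in range(0, rows, 2):
        a_row, r_row, c_row, d_row = [], [], [], []
        for c in range(0, cols, 2):
            tl, tr = image[r][c], image[r][c + 1]
            bl, br = image[r + 1][c], image[r + 1][c + 1]
            # Orthonormal Haar: two 1/sqrt(2) filters (rows, then columns) give 1/2.
            a_row.append((tl + tr + bl + br) / 2)
            r_row.append((tl + tr - bl - br) / 2)
            c_row.append((tl - tr + bl - br) / 2)
            d_row.append((tl - tr - bl + br) / 2)
        approx.append(a_row)
        row_diff.append(r_row)
        col_diff.append(c_row)
        diag.append(d_row)
    return approx, row_diff, col_diff, diag


if __name__ == "__main__":
    print(haar_dwt2([[1, 2], [3, 4]]))
    # -> ([[5.0]], [[-2.0]], [[-1.0]], [[0.0]])
```

In a pipeline of this kind, the sub-bands would typically be stacked as extra input channels or fed to separate branches of the network; which option the published model uses is not specified in this summary.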

Published research in Elsevier's book "Non-stationary and nonlinear data processing for automated computer-aided medical diagnosis" authored by RK Tripathy, RB Pachori, Sibashankar Padhy, Maarten De Vos (ISBN: 9780443314261).

The first YouTube RAG AI that actually watches the video, not just reads transcripts. U-Tube AI analyzes both audio and visual streams to extract slides, code snippets, and diagrams that transcript-only tools ignore. Powered by intelligent OCR routing and dual-model synthesis, it achieves enterprise-quality understanding at a fraction of the cost, while NoteGPT, Notta, and MyMap.AI miss visual content entirely.

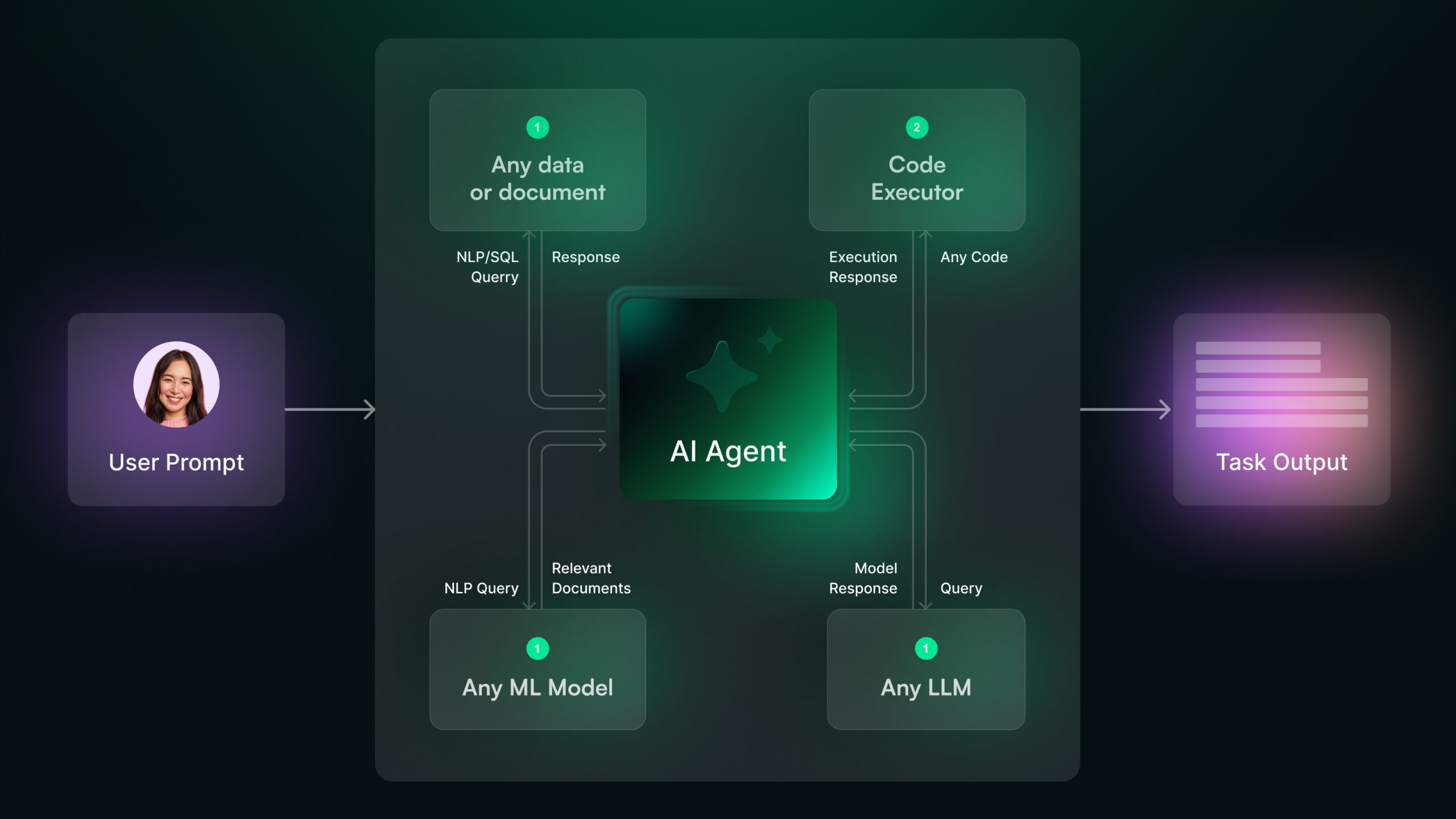

EdgeSeg-AI is a research-backed image segmentation framework that democratizes advanced AI by reducing memory usage by 60-70% through sequential model loading. Its multi-modal architecture orchestrates prompt interpretation, object detection, and mask generation, enabling high-quality segmentation on consumer hardware with a seamless user experience.
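The idea behind sequential model loading can be sketched as follows: only one stage's model is held in memory at a time, and it is released before the next one is loaded. This is a minimal illustration, not EdgeSeg-AI's actual code; `run_sequential`, the stage names, and the toy loaders are hypothetical.

```python
import gc
from typing import Any, Callable, List, Optional, Tuple

# A stage is a name plus a loader that builds the model only when called.
Stage = Tuple[str, Callable[[], Callable[[Any], Any]]]


def run_sequential(stages: List[Stage], data: Any,
                   log: Optional[List[str]] = None) -> Any:
    """Run stages one after another, keeping at most one model alive."""
    for name, load in stages:
        model = load()            # load this stage's model on demand
        if log is not None:
            log.append(f"load {name}")
        data = model(data)        # run the stage on the previous output
        del model                 # drop the only reference to the model
        gc.collect()              # encourage prompt release of its memory
        # With PyTorch on a GPU, torch.cuda.empty_cache() is commonly
        # called at this point as well (assumption, not shown here).
        if log is not None:
            log.append(f"free {name}")
    return data


if __name__ == "__main__":
    # Toy stand-ins for prompt interpretation, detection, and masking.
    stages = [
        ("prompt", lambda: (lambda text: text.strip().lower())),
        ("detect", lambda: (lambda label: {"label": label, "box": (0, 0, 4, 4)})),
        ("mask", lambda: (lambda det: {**det, "mask_area": 16})),
    ]
    events: List[str] = []
    print(run_sequential(stages, "  Cat ", events))
    print(events)
```

The trade-off is latency: each stage pays its load time on every request, in exchange for a peak memory footprint closer to that of the largest single model than to the sum of all of them.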

Moody.AI is a cutting-edge multimodal emotion recognition system that analyzes video content using computer vision, audio processing, and natural language processing. Combining DINOv2, fine-tuned Wav2Vec2, DistilBERT, and Whisper models through a trimodal fusion architecture, it achieves 61% accuracy on the challenging MELD dataset and is deployed with Docker.

OsteoDiagnosis.AI (a product of our ongoing research) is a novel deep learning architecture that integrates advanced signal processing techniques for three-class osteoporosis classification, achieving 90% accuracy. It has been deployed as an Android application for accessible bone health diagnostics.

Expression.AI is a real-time facial expression recognition Android app using TensorFlow Lite and OpenCV. It classifies emotions from camera or gallery images using a custom model trained on FER2013. It's fast, efficient, and less than 500 MB. You can download the app from the APK link inside the pop-up. (Note: it's completely virus-free.)

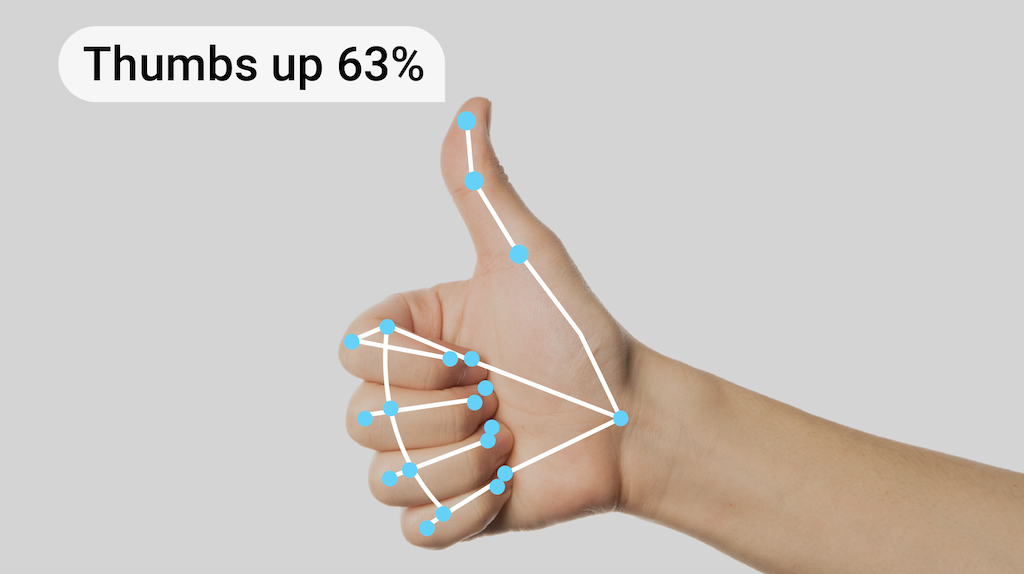

Developed an AI Hand Gesture Recognition System leveraging Apple's FastViT Vision Transformer, achieving 97.5% accuracy on the validation set. This project focuses on real-time gesture recognition for 19 distinct hand gestures, demonstrating efficient model performance for practical applications. The system utilizes transfer learning on the HaGRID dataset, making it suitable for deployment in resource-constrained environments.

Smart document processing system using DeepSeek and Llama models for intelligent analysis, extraction, and summarization of complex documents.

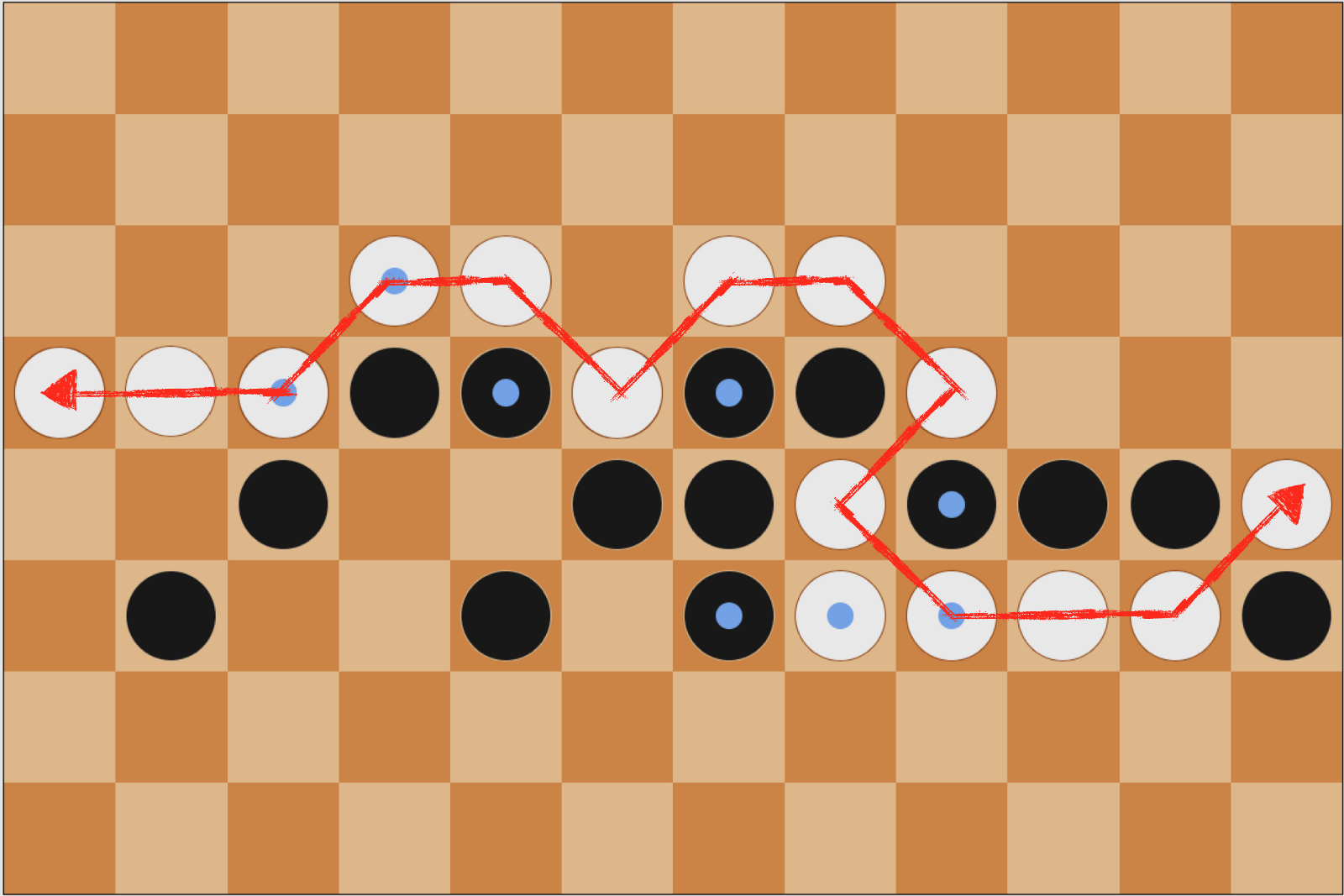

A Checkers game featuring a Smart AI using Minimax with alpha-beta pruning against a Random AI, demonstrating strategic decision-making in game development.

Deep learning-based gender detection system using CNN & InceptionV3, achieving 94.35% accuracy on the CelebA dataset with advanced augmentation techniques.

Hyderabad, India • Currently Studying

A blog on MedMamba, the first Vision Mamba architecture specifically designed for generalized medical image classification, addressing the computational limitations of traditional CNNs and Vision Transformers. Developed by Yubiao Yue and Zhenzhang Li, this groundbreaking work positions MedMamba as a superior replacement for ViTs by achieving linear computational complexity (O(N)) compared to ViTs' quadratic complexity (O(N²)), making it ideal for resource-constrained medical environments where real-time diagnosis is critical. The comprehensive technical guide explores how MedMamba leverages State Space Models (SSMs) and the innovative 2D-Selective-Scan mechanism to maintain global receptive fields while reducing FLOPs by up to 55% compared to equivalent transformer models. The blog demonstrates why practitioners should adopt MedMamba over traditional ViTs: it delivers competitive accuracy (93.7% average across medical datasets) with significantly lower memory requirements and faster inference times, making it practical for deployment in clinical settings where computational efficiency directly impacts patient care.
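To make the O(N) versus O(N²) comparison concrete, the sketch below counts only how the number of token interactions grows with sequence length. It deliberately ignores constant factors, hidden dimensions, and hardware effects, so it illustrates scaling rather than measuring MedMamba or any ViT; both function names are hypothetical.

```python
def attention_pairs(n_tokens: int) -> int:
    """Self-attention compares every token with every token: N * N pairs."""
    return n_tokens * n_tokens


def scan_steps(n_tokens: int) -> int:
    """A sequential state-space scan visits each token once: N steps."""
    return n_tokens


if __name__ == "__main__":
    # Token counts for 14x14, 28x28, and 56x56 patch grids.
    for n in (196, 784, 3136):
        print(f"N={n:5d}  attention pairs={attention_pairs(n):9d}  "
              f"scan steps={scan_steps(n):5d}")
```

Each fourfold increase in tokens (doubling the grid side) multiplies the attention pair count by 16 but the scan step count by only 4, which is why the gap widens at higher image resolutions.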
